Syntax Highlighting

Yesterday, I finally got around to publishing my OPL support for highlight.js. I’ve been using it here since I wrote it a couple of months ago, but now it’s available on GitHub and npm for others to use.

    PROC hello:
      PRINT "OPL is amazing!"
      GET
    ENDP

Just like highlight.js itself, it’s incredibly easy to use, and there are a few different options.

You can use the module directly (what I do on this website):

    import hljs from '../highlight.js/es/highlight.js';
    import opl from './highlightjs-opl/src/languages/opl.js';

    hljs.registerLanguage('opl', opl);
    hljs.highlightAll();

Or add the minified self-registering version from the UNPKG CDN to your site’s head (how I’m using it on the OpoLua website):

    <link rel="stylesheet" href="https://cdnjs.cloudflare.com/ajax/libs/highlight.js/11.11.1/styles/default.min.css">
    <script src="https://cdnjs.cloudflare.com/ajax/libs/highlight.js/11.11.1/highlight.min.js"></script>
    <script type="text/javascript" src="https://unpkg.com/highlightjs-opl/dist/opl.min.js"></script>
    <script type="text/javascript">
      hljs.highlightAll();
    </script>

I’m looking forward to seeing all your OPL projects!

Publishing OpoLua for Debian and Ubuntu

Ever since Tom decided to bring OpoLua—our modern runtime for OPL—to Linux with the introduction of a Qt-based desktop app, I’ve wanted to make it as easy to install and update as the macOS and iOS apps. This week I did that for Debian and Ubuntu by setting up a self-hosted apt repository for the software we publish—if you’re using a Debian-based system and you’ve not yet tried out OpoLua, you can get started with the following simple commands:

    curl -fsSL https://releases.jbmorley.co.uk/apt/public.asc | sudo gpg --dearmor -o /etc/apt/trusted.gpg.d/jbmorley.gpg
    echo "deb https://releases.jbmorley.co.uk/apt $(lsb_release -sc) main" | sudo tee /etc/apt/sources.list.d/jbmorley.list
    sudo apt update
    sudo apt install opolua

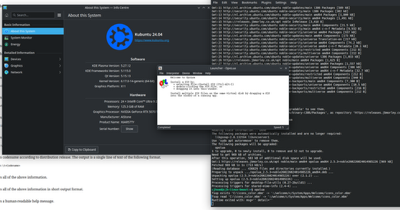

I’ve successfully installed OpoLua on Debian Trixie and on Ubuntu Noble and Questing, and, thanks to Colin, we have evidence that it even works on Kubuntu:

The new apt repositories are built on top of our existing GitHub CI: releases on GitHub now include manifest files that describe which platforms and architectures each binary and package supports, and these files determine what goes into the apt repository. Unlike many other GitHub-based solutions I’ve seen, I plan to host historical builds of OpoLua (and other projects) so folks can access builds for their OS for as long as possible, even if we’ve had to drop support for that OS to enable new features. (Though thankfully that shouldn’t be necessary with OpoLua as Qt seems to have an amazing backwards compatibility story.)
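To make the manifest idea a little more concrete, here is a rough sketch of how manifest metadata could drive what ends up in each part of an apt repository. This is not the actual CI code: the manifest shape (`file`, `codename`, `architecture`), the file names, the version numbers, and the `planRepository` helper are all made up for illustration.

```javascript
// Hypothetical manifest entries, one per package attached to a release.
// The field names and file names are assumptions, not the real schema.
const manifests = [
  { file: 'opolua_1.0.0_trixie_amd64.deb', codename: 'trixie', architecture: 'amd64' },
  { file: 'opolua_1.0.0_trixie_arm64.deb', codename: 'trixie', architecture: 'arm64' },
  { file: 'opolua_1.0.0_noble_amd64.deb', codename: 'noble', architecture: 'amd64' },
  // An older build kept alongside the newer one, so historical versions stay installable.
  { file: 'opolua_0.9.0_noble_amd64.deb', codename: 'noble', architecture: 'amd64' },
  { file: 'opolua_1.0.0_questing_amd64.deb', codename: 'questing', architecture: 'amd64' },
];

// Group package files by "codename/architecture", which roughly mirrors how
// an apt repository splits its package indexes by suite and architecture.
function planRepository(entries) {
  const plan = new Map();
  for (const { file, codename, architecture } of entries) {
    const key = `${codename}/${architecture}`;
    if (!plan.has(key)) {
      plan.set(key, []);
    }
    plan.get(key).push(file);
  }
  return plan;
}

const plan = planRepository(manifests);
for (const [key, files] of plan) {
  console.log(`${key}: ${files.join(', ')}`);
}
```

Because the grouping only looks at the metadata, nothing is inferred from file names, and older builds can sit next to newer ones in the same group.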

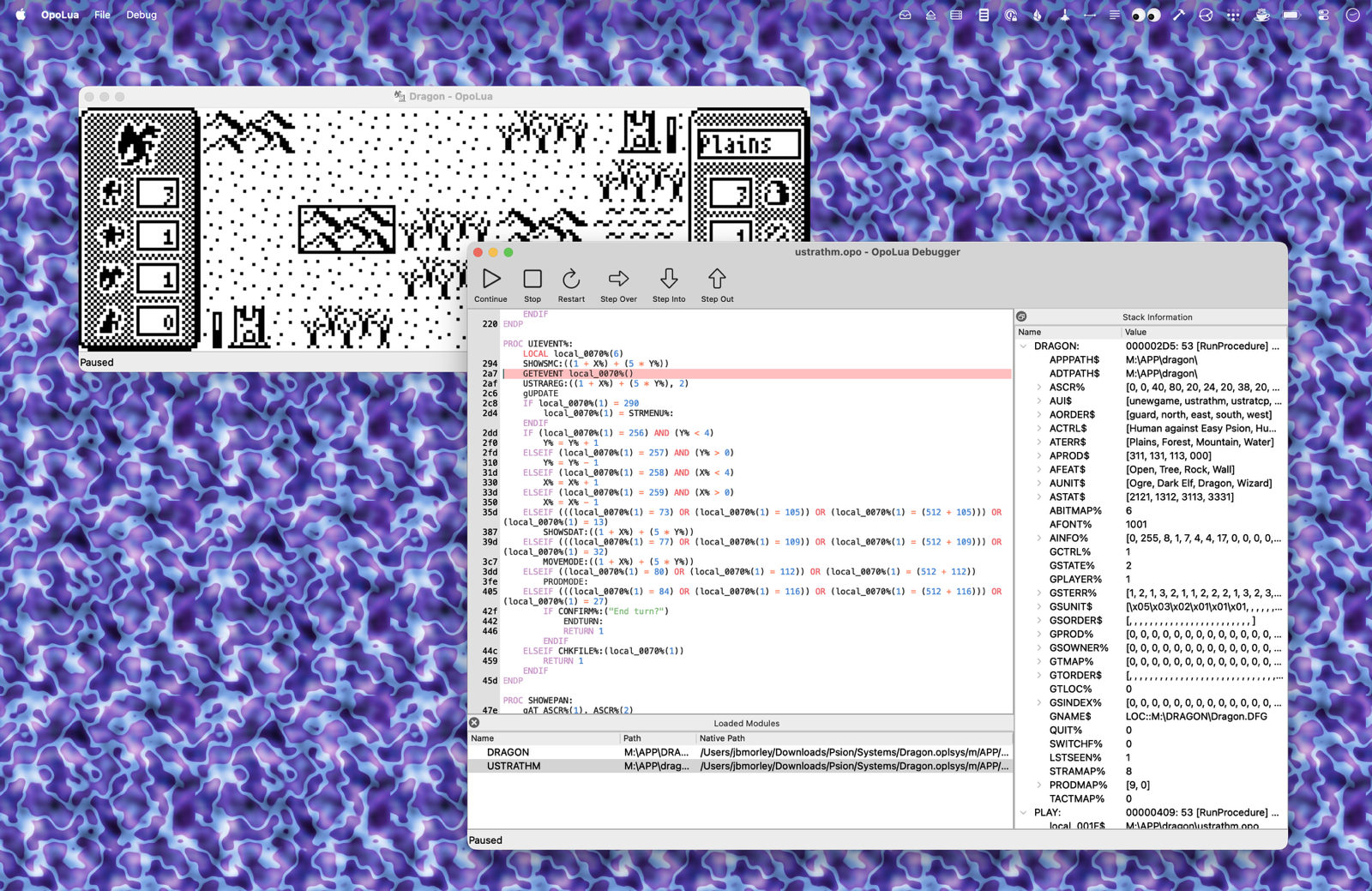

In addition to the new repository, there have been significant developments in OpoLua over the past few months, with Tom landing a huge feature last week in the form of an interactive decompiler and debugger, complete with breakpoints and live updates:

Peeking at the inner workings of Cyningstan’s recent release for the Series 3, Dragonfell.

And finally, OpoLua wasn’t the only software to receive a release this week: the new apt repository also contains Debian and Ubuntu builds of Reporter, my lightweight file change report generator.

Rethinking Writing

Coming off the back of my December Adventure, I was incredibly keen to continue a practice of daily writing—I found the process deeply rewarding and a wonderful opportunity to reflect on my work, share my process, and be more deliberate about how I approach my projects. Now, nearly a month since I last wrote, it’s clear that, while this works well in the context of adventuring, it doesn’t translate to my typical way of working. I’d like to reflect on why.

During my December Adventure, I deliberately selected relatively short, self-contained tasks that were fun or immediately rewarding—things I felt others in the Psion community might enjoy reading about—and, consequently, that made the process of writing a joy. The work of exploring and supporting older computers is also wonderfully broad, offers a wide variety of problems, and is charmingly pure when contrasted with the myriad cultural and political shifts happening in the industry and world at large. Simply put: it is something I am happy and excited to engage in and share.

As I’ve transitioned back to more work-like tasks, I find I’m taking on bigger projects in more contemporary languages that are, frankly, less fun—what I’m doing now is driven more by the outcome than the joy of the journey. Specifically, I’ve been focused on infrastructural work in both Folders, my Photos-like file manager for macOS, and Reconnect, my Psion connectivity suite for macOS. This work has necessitated spending many days bashing my head against Swift and SwiftUI, and it’s not fun to write about the endless process of working around Apple’s phoned-in APIs and absentee-parent approach to language design. It’s also much harder, working on modern platforms, to ignore the spectre of AI and how it’s fundamentally changing the culture of creation—a shift that only seems to have accelerated in the past couple of months, and one that quells the deep enthusiasm and optimism I usually hold for engineering.

With all this in mind, I’m going to go back to more ad-hoc writing, highlighting some of the larger pieces of work when I complete them, and documenting my little side-quests as and when they occur. I also hope to take some time to sit down and write about my evolving feelings around AI—specifically how it continues a decades-long trend of abdicating responsibility in product and infrastructure design.

(I note that Eli has designated the Ides of March a week of adventuring, something I suspect many of us desperately need. I have plans.)